How might we help visually impaired people dress effortlessly for any occasion, mood, or weather?

Introduction

Every morning, millions of people get dressed in seconds, using their sight to coordinate colors, textures, and styles. For someone who is blind or visually impaired, that simple task becomes a source of stress, dependence, and decision fatigue.

Role

UX Research, Strategy

Product Design

Accessibility Testing

Team

Me (Product Designer)

Developer

Project tenure

12 weeks

Tools

Figma

Adobe Creative Suite

Flutter

The challenge 🎯

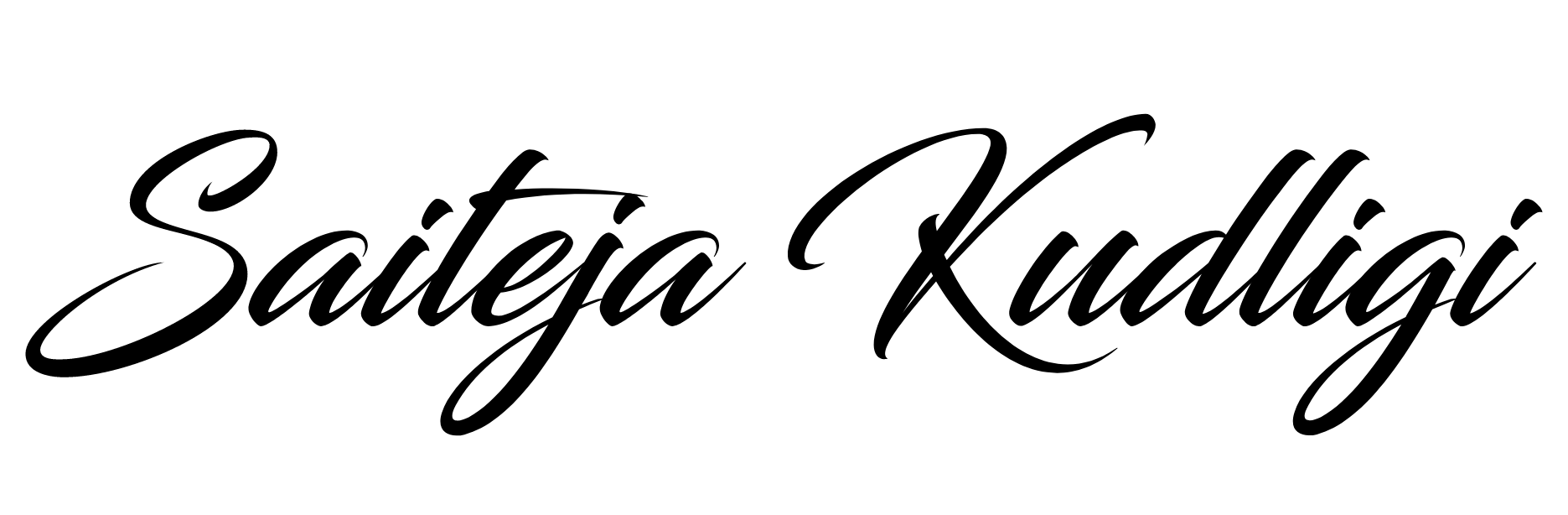

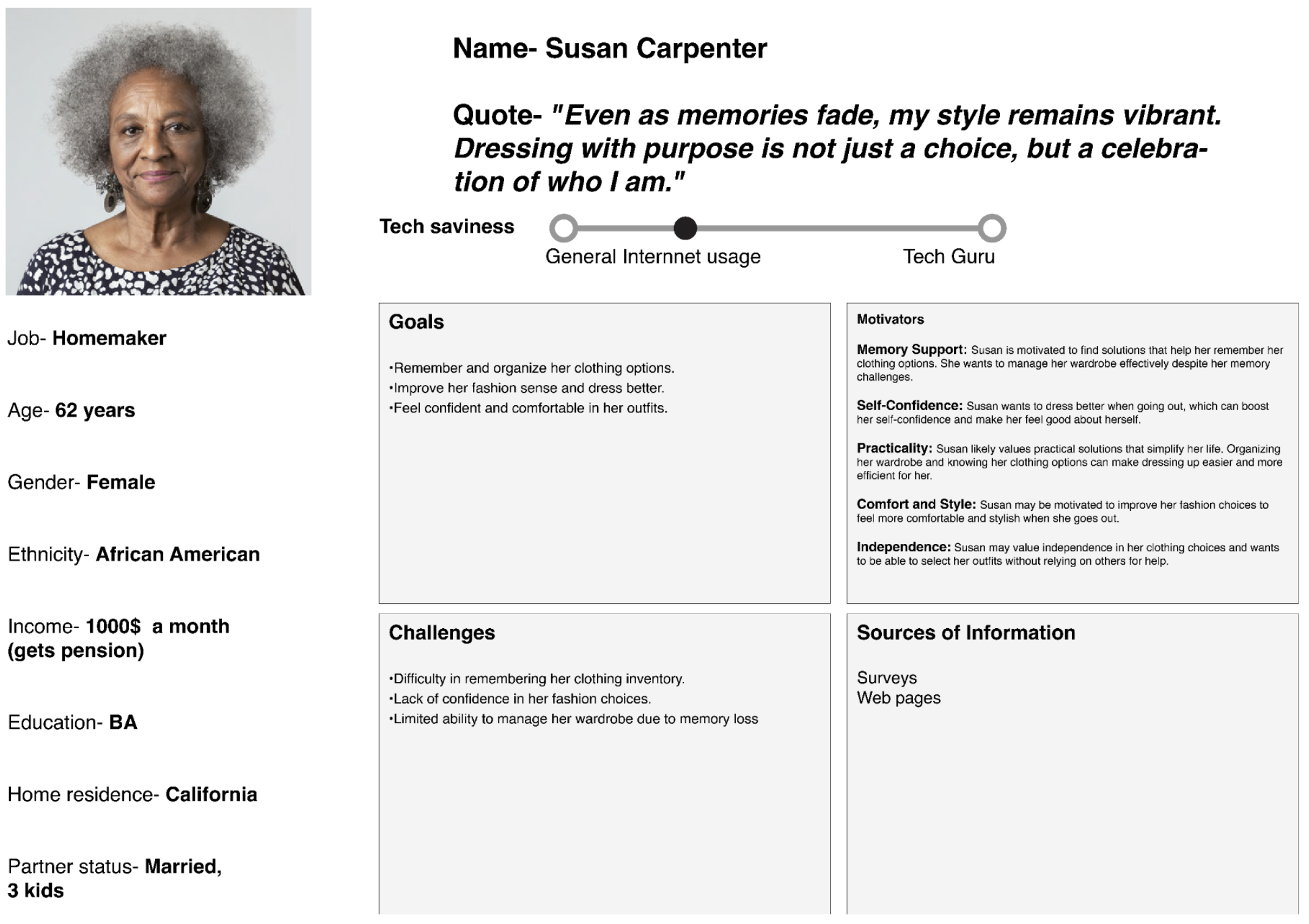

Visually impaired individuals often need help managing their wardrobe, selecting apparel, and pairing outfits. This reduces their independence and makes daily routines less efficient.

The solution 💡

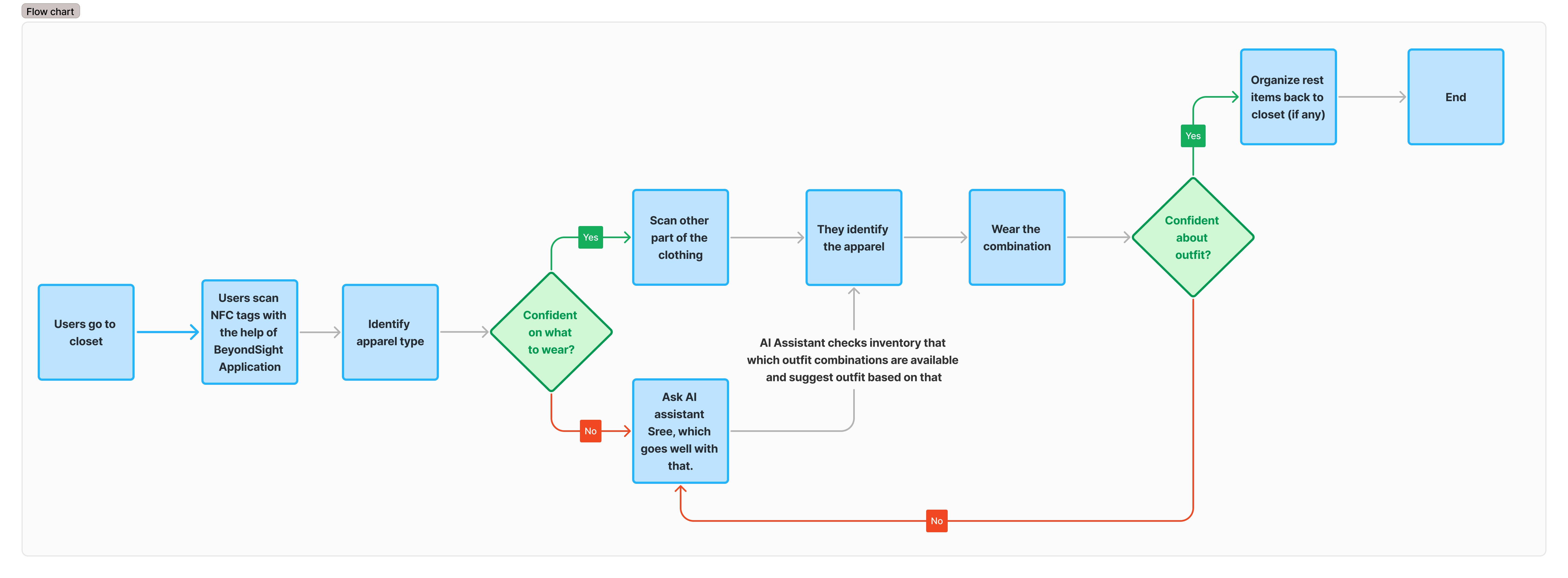

Design a mobile-first, AI-powered product to enable users to independently identify, manage, and pair their clothing using accessible, voice-first, and NFC-powered interaction.

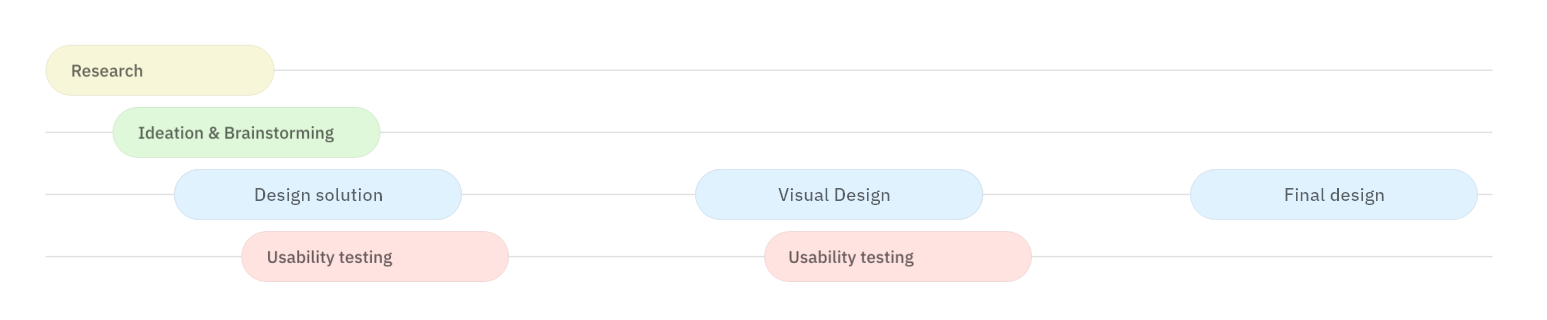

Timeline

A rough visual overview of the whole process, from inception to measurement.

Preface

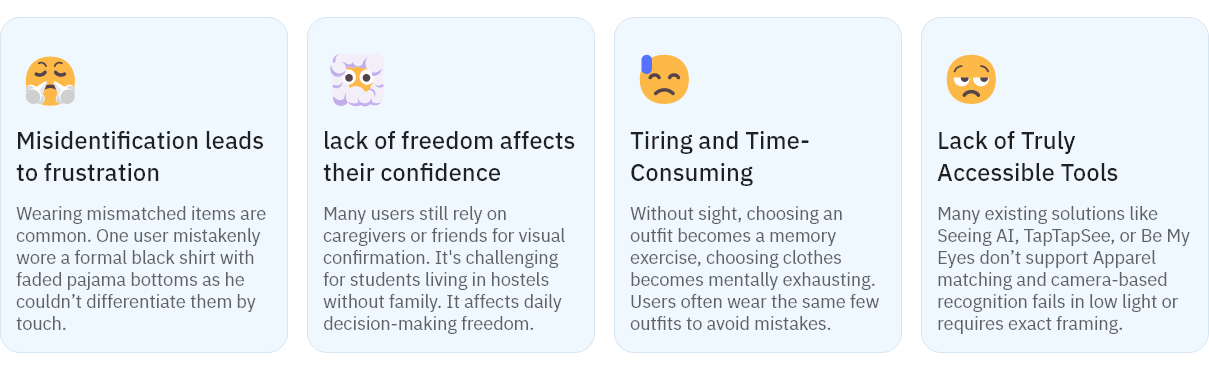

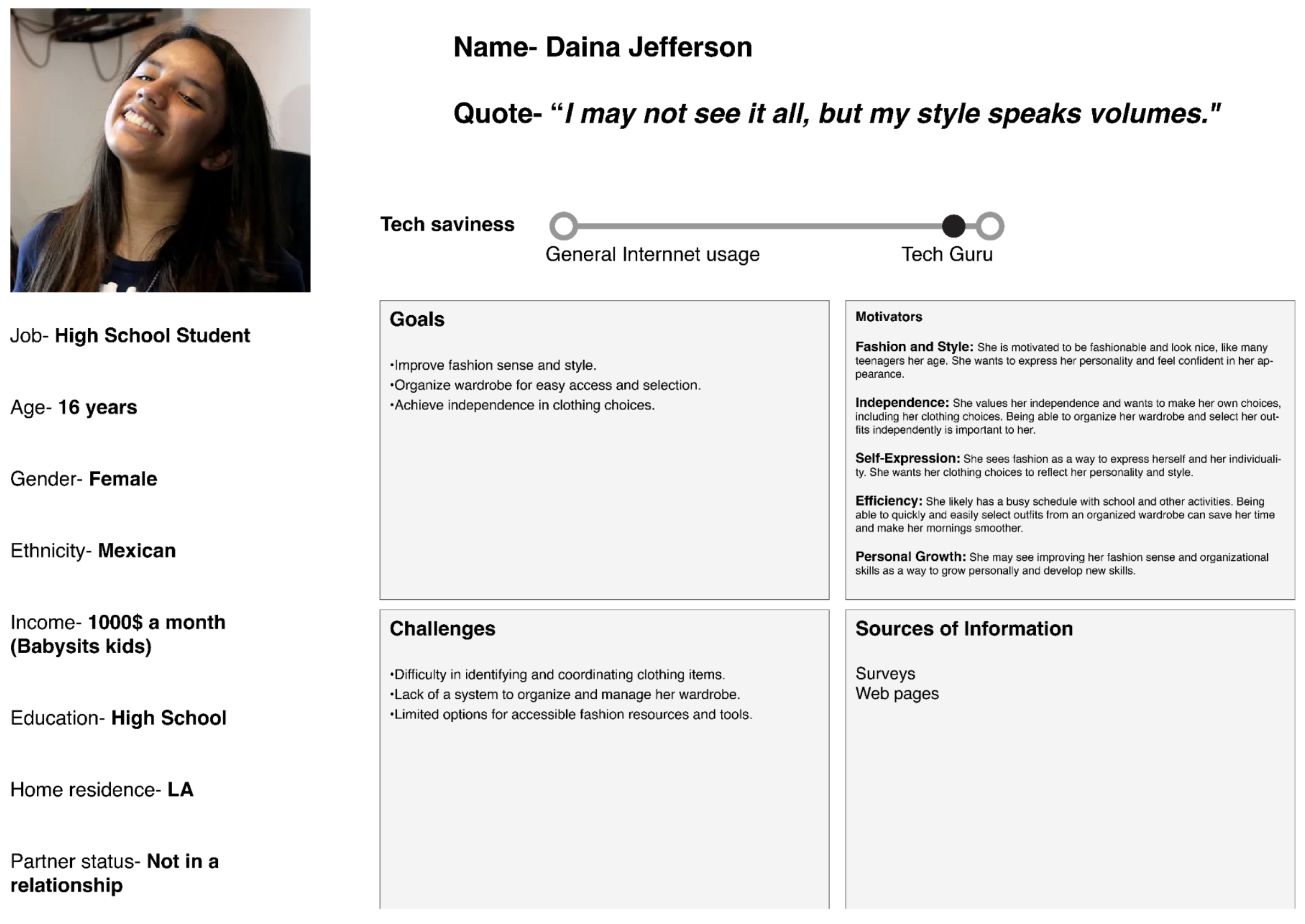

To build a user-centered experience, we anchored our process in both primary and secondary research, combining qualitative interviews with quantitative data. This gave us a 360° view of the challenges and opportunities in wardrobe management for the visually impaired.

We conducted 7 semi-structured interviews, followed by 10 contextual observations. Participants ranged from hostel students to working professionals. These diverse insights helped us deeply understand user needs, habits, and pain points, and they guided us toward an inclusive solution.

Solution

Physical Prototype (Iteration 1)

Our initial solution was a physical, IoT-powered device that used RFID technology to scan tags.

- Users attach RFID tags to their clothes

- They scan the tags with an RFID scanner, which gives audio feedback

Drawbacks of Using Arduino

Arduino was useful for early prototyping, but it came with many constraints. Testing the prototype surfaced several issues:

- Cumbersome Tag Setup: Users had to manually switch between write and scan modes, which felt unintuitive.

- No AI Capability: Testers wanted smart outfit recommendations, but Arduino couldn’t support AI features.

- Limited Connectivity: Arduino has no built-in internet access. Adding Wi-Fi or GSM requires additional chips, and more chips mean a bigger device.

Physical Prototype (Iteration 2)

Next, we tried ESP32 and Raspberry Pi boards to deliver AI suggestions over Wi-Fi and GSM. However, each device was too expensive (around $200–$250) and hard to use. Setting up RFID tags was still complicated, and it required either a steep learning curve or help from someone else.

So, we switched to NFC tags. They are cheaper and easier to use, and they work directly with smartphones, so no separate hardware device is needed.

Visual Design (Iteration 2)

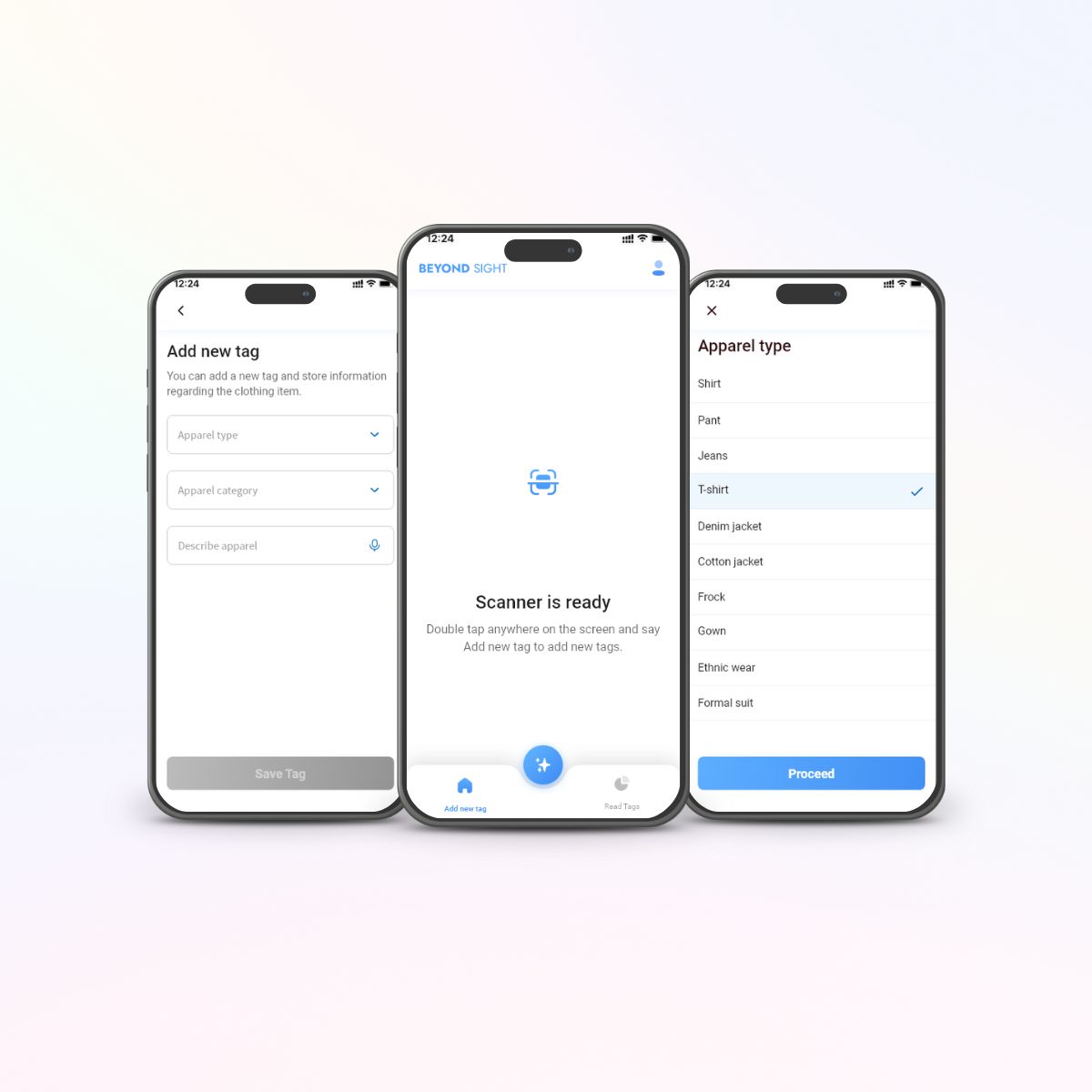

After exploring different hardware options and testing various prototypes, I decided to focus on a mobile application for both Android and iOS platforms. This decision was based on two key factors:

- Smartphones already support NFC, making it easy for users to scan clothing tags without needing extra hardware.

- Most users are already familiar with their phones, which reduces the learning curve and increases adoption.

Usability Testing

Phase 1 – India (Blind School, Aged 18–38)

We tested the visual and interaction design with 10 participants, most of whom were using NFC for the first time.

- 80% had no prior experience with NFC, yet all were able to complete the full flow within 5 minutes.

- Some users found the bottom navigation bar confusing, as it wasn’t easily reachable or intuitive with screen readers.

- Most users liked the voice input and TalkBack support, saying it helped them know what was on the screen.

- A few people felt that the AI voice sounded robotic and suggested making it sound more natural.

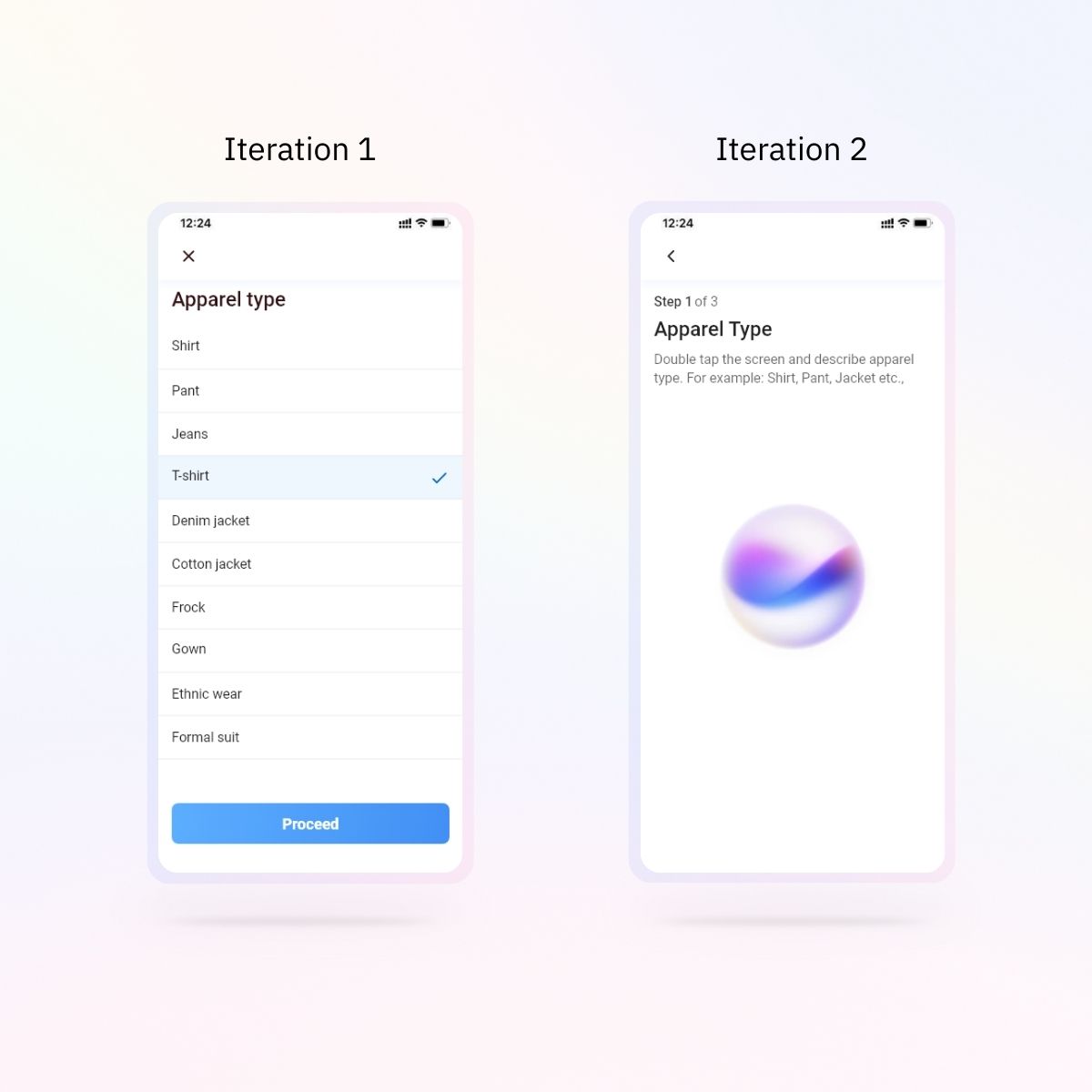

- The Add New Tag flow felt a bit complex, because users had to pick an apparel category from a long list.

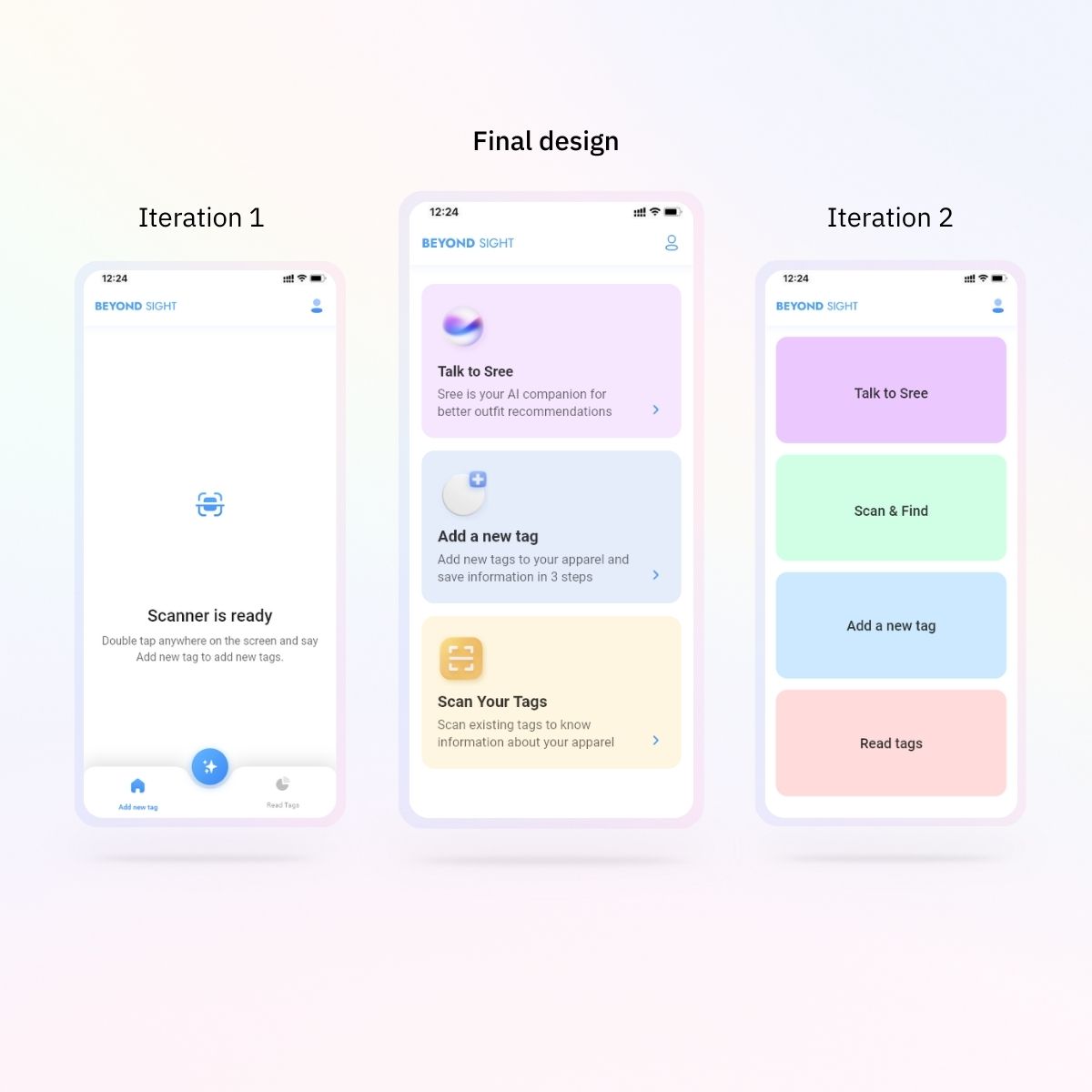

After testing the app with users, I made changes to the Home screen and the Add New Tag process.

- I removed the bottom navigation bar and added three large tiles to the Home screen to maximize the touch targets. This made it easier for users to find and choose what they want to do.

- I broke the Add New Tag flow into three simple steps. Before, users had to scroll through a long list and remember every option, which increased their cognitive load. Splitting the flow into steps reduced that load.

- Many users said that selecting from the list was confusing and left them tired or stressed when accessibility mode was enabled on their devices. So, I added a voice input option. Users can now simply speak the item details, and the app uses AI-powered speech-to-text to convert and save the information.

These changes made the app easier, faster, and more comfortable to use.

Phase 2

- 8 participants (6 blind, 2 low vision)

- 95% task completion on the first try (scanning, tagging, and AI conversation)

- Quote: “It felt like Siri—but better, and it remembered my clothes.”

Final Solution

Onboarding with Sree

- Voice-guided tutorial introduces the app’s three core modes: Read, Write, Ask

- Onboarding is skippable or repeatable based on user confidence

Read Tags (NFC Scanning)

- User taps the phone against an NFC-tagged garment

- Receives haptic confirmation + voice description:

“White kurta with golden embroidery. Wedding outfit.”
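The case study does not say how a description is stored on a tag. One common option is a standard NFC Forum NDEF Text record, which phones can read natively. Assuming that format, the Python sketch below shows how the raw record bytes map back to the phrase Sree speaks. It is an illustration, not the app's Flutter code. The function name `decode_text_record` is hypothetical. Reading the bytes from hardware and the text-to-speech step are left to the platform and are not shown.

```python
# Sketch only: decodes one short NDEF well-known Text record, assuming each
# garment tag stores its description this way (the case study does not say).


def decode_text_record(record: bytes) -> tuple[str, str]:
    """Return (language_code, text) from a short NDEF Text ("T") record."""
    header = record[0]
    tnf = header & 0x07                  # Type Name Format; 0x01 = NFC Forum well-known type
    is_short = bool(header & 0x10)       # SR flag: payload length is a single byte
    has_id = bool(header & 0x08)         # IL flag: an ID-length byte follows the payload length
    if tnf != 0x01 or not is_short:
        raise ValueError("expected a short well-known record")

    type_len = record[1]
    payload_len = record[2]
    pos = 3
    id_len = 0
    if has_id:
        id_len = record[pos]
        pos += 1
    record_type = record[pos:pos + type_len]
    pos += type_len + id_len             # skip the type and any record ID
    if record_type != b"T":
        raise ValueError("not a Text record")

    payload = record[pos:pos + payload_len]
    status = payload[0]
    if status & 0x80:                    # bit 7 set means UTF-16 text
        raise ValueError("UTF-16 text is not handled in this sketch")
    lang_len = status & 0x3F             # low 6 bits: length of the language code
    lang = payload[1:1 + lang_len].decode("ascii")
    text = payload[1 + lang_len:].decode("utf-8")
    return lang, text


# Usage: a hand-built record carrying the example description above.
text = "White kurta with golden embroidery. Wedding outfit."
payload = bytes([2]) + b"en" + text.encode("utf-8")  # status 2 = UTF-8, 2-char language code
sample = bytes([0xD1, 0x01, len(payload)]) + b"T" + payload
print(decode_text_record(sample))  # ('en', 'White kurta with golden embroidery. Wedding outfit.')
```

The decoded text is exactly what would be handed to the device's speech engine. Keeping the stored string in its final spoken form means the read path needs no extra lookup.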

Add Tags (Write Flow)

- Step 1: Speak the garment type

- Step 2: Describe it in detail

- Step 3: Mention the category or usage context (e.g., ‘formal’, ‘summer’)
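The three steps above produce three short spoken answers. A minimal sketch of the write side, under the same assumption that tags hold an NDEF Text record, joins those answers into the sentence read back later and encodes it for the tag. The helper names are hypothetical. The sketch assumes speech-to-text has already turned each answer into a string, and the joining rule (garment plus details as one sentence, usage context as a second) is an illustrative choice.

```python
# Sketch only: turns the three spoken answers from the Add Tags flow into one
# description and encodes it as a short NDEF Text record. Function names are
# hypothetical; speech-to-text is assumed to have already produced the strings.


def _sentence(part: str) -> str:
    """Trim, capitalize the first letter, and end with exactly one period."""
    part = part.strip().rstrip(".")
    return part[:1].upper() + part[1:] + "." if part else ""


def build_description(garment: str, details: str, context: str) -> str:
    """Step 1 + Step 2 form the first sentence; Step 3 (usage context) the second."""
    first = _sentence(f"{garment.strip()} {details.strip()}")
    second = _sentence(context)
    return " ".join(s for s in (first, second) if s)


def encode_text_record(text: str, lang: str = "en") -> bytes:
    """Encode text as a single short NDEF well-known Text ("T") record (UTF-8)."""
    lang_bytes = lang.encode("ascii")
    # Status byte: bit 7 = 0 (UTF-8), low 6 bits = language-code length.
    payload = bytes([len(lang_bytes)]) + lang_bytes + text.encode("utf-8")
    if len(payload) > 255:
        # A short record stores the payload length in one byte; longer text
        # would need the normal (4-byte length) format, which this sketch skips.
        raise ValueError("description too long for a short record")
    # Header 0xD1 = MB | ME | SR | TNF 0x01 (well-known); type length 1; type "T".
    return bytes([0xD1, 0x01, len(payload)]) + b"T" + payload


description = build_description("White kurta", "with golden embroidery", "wedding outfit")
record = encode_text_record(description)
print(description)   # White kurta with golden embroidery. Wedding outfit.
print(len(record))   # 58
```

The physical tag's memory also limits how long a description can be. That limit varies by chip and is not modeled here. On Android, `NdefRecord.createTextRecord(languageCode, text)` builds the same record type, so an app would normally not encode bytes by hand.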

Ask Sree (AI companion)

Context-aware outfit suggestions based on:

- Current weather via API

- Event or occasion (“wedding”, “presentation”, “party”)

- User preferences over time
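The case study names three signals behind Ask Sree's suggestions but not how they are combined. To illustrate one way they could interact, the sketch below swaps the AI companion for a plain rule-based ranker. It keeps garments tagged for the requested occasion, then prefers those that suit the current weather, then those the user has picked most often. Everything in it is hypothetical: the `Garment` fields, the temperature thresholds, and the ordering. It assumes the temperature has already been fetched from a weather API elsewhere.

```python
# Rule-based stand-in for Ask Sree's ranking, for illustration only. The case
# study describes an AI companion; the fields, thresholds, and ordering here are
# hypothetical, and the temperature is assumed to come from a weather API call
# made elsewhere.
from dataclasses import dataclass


@dataclass
class Garment:
    description: str
    occasions: set[str]      # from the tag's usage-context step, e.g. {"wedding"}
    seasons: set[str]        # e.g. {"summer", "mild"}
    times_chosen: int = 0    # preference signal accumulated over time


def season_for(temp_c: float) -> str:
    """Map a temperature in °C to a coarse season label (illustrative thresholds)."""
    if temp_c >= 25:
        return "summer"
    if temp_c <= 12:
        return "winter"
    return "mild"


def suggest(wardrobe: list[Garment], occasion: str, temp_c: float, limit: int = 3) -> list[str]:
    """Keep garments tagged for the occasion, then prefer weather fit, then past picks."""
    occasion = occasion.strip().lower()
    season = season_for(temp_c)
    matches = [g for g in wardrobe if occasion in g.occasions]
    # Tuples sort lexicographically: weather match first, then how often it was chosen.
    ranked = sorted(matches, key=lambda g: (season in g.seasons, g.times_chosen), reverse=True)
    return [g.description for g in ranked[:limit]]


wardrobe = [
    Garment("White kurta with golden embroidery", {"wedding"}, {"summer", "mild"}, 4),
    Garment("Navy blazer", {"wedding", "presentation"}, {"mild", "winter"}, 2),
    Garment("Cotton t-shirt", {"party"}, {"summer"}, 9),
]
print(suggest(wardrobe, "Wedding", 30))  # ['White kurta with golden embroidery', 'Navy blazer']
print(suggest(wardrobe, "wedding", 8))   # ['Navy blazer', 'White kurta with golden embroidery']
```

In this sketch, the same wardrobe and occasion yield a different top pick when the temperature changes, which is the behavior the weather signal is meant to add. An empty result means nothing is tagged for that occasion. A conversational companion could treat that as a cue to ask a follow-up question.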

Reflection

Designing Beyond Sight wasn’t just about solving a problem. It was about giving people back a sense of control and independence. Fashion is personal, and for our users, it felt like something they had lost.

Accessibility isn’t about edge cases—it’s about designing for dignity.

“I don’t want to be stylish like someone else. I just want to feel like myself again. I’ll definitely use this app once it’s launched” – Rakesh, Blind Student